Bandits can trick you about your outcomes

Posted on Wed 12 February 2020 in blog

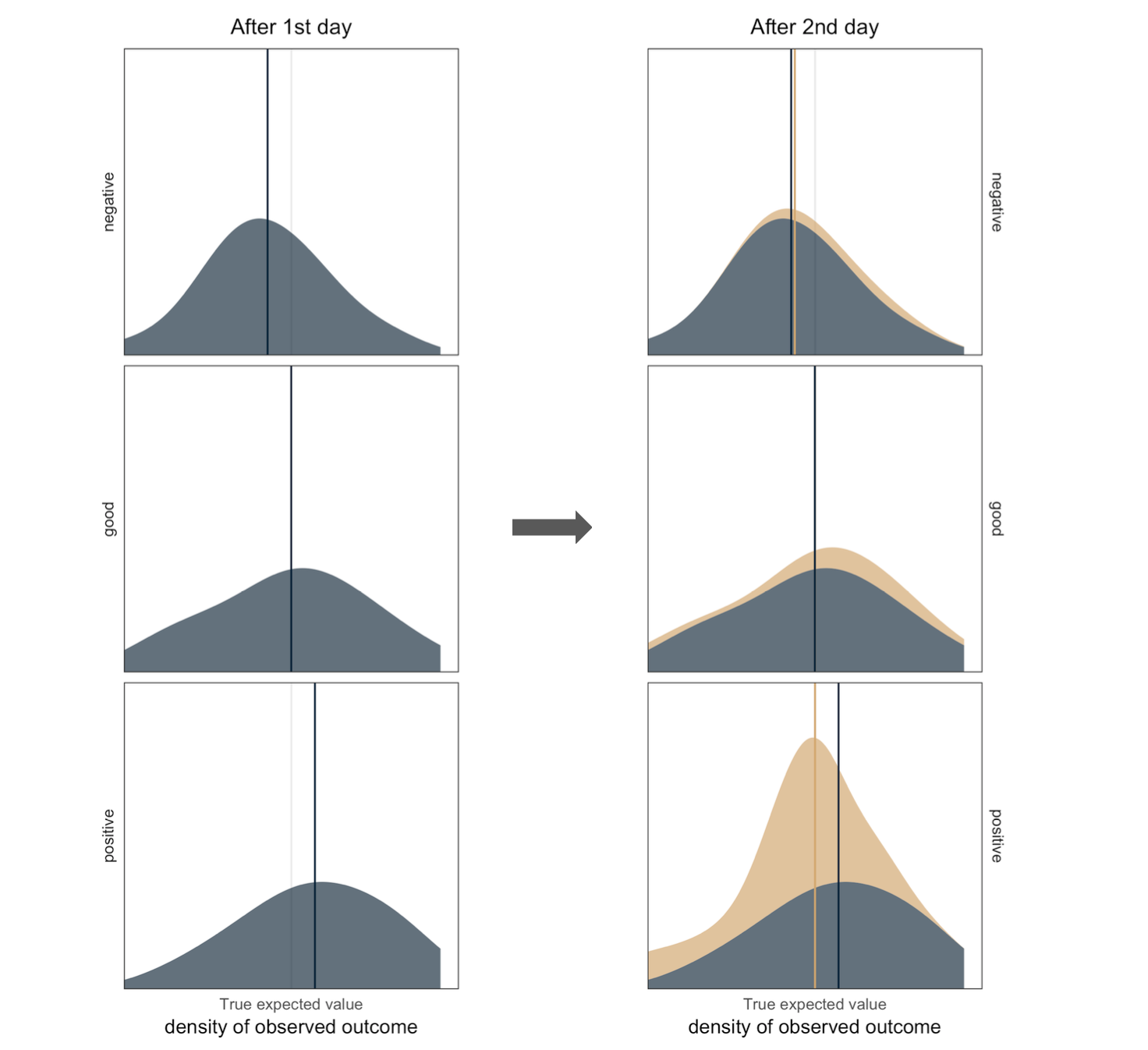

My story is about multi-armed bandits and why data collected with bandits leads to underestimated means. If you work with online data (webshops, ads, mobile apps, etc.), multi-armed bandit algorithms can speed up your testing process. However, measuring the outcomes of the different versions is not that straightforward: the average you observe for each version tends to fall below its true value. This post is published at Emarsys Craftlab, where you can read it.
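
To get a feel for the effect before reading the full post, here is a minimal simulation sketch. It is not taken from the original article: it assumes an epsilon-greedy bandit, two versions with the same true conversion rate of 0.5, and a short horizon of 50 visitors. The function names and all parameter values are made up for illustration.

```python
import random


def run_bandit(true_means, horizon, epsilon, rng):
    """Run one epsilon-greedy bandit and return each arm's final sample mean."""
    n_arms = len(true_means)
    pulls = [0] * n_arms
    wins = [0] * n_arms

    def pull(arm):
        pulls[arm] += 1
        wins[arm] += rng.random() < true_means[arm]  # Bernoulli reward (0 or 1)

    # Pull every arm once so that each arm has an estimate to compare.
    for arm in range(n_arms):
        pull(arm)

    for _ in range(horizon - n_arms):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick a random arm
        else:
            # Exploit: pick the arm with the best observed mean
            # (ties go to the lowest arm index).
            arm = max(range(n_arms), key=lambda a: wins[a] / pulls[a])
        pull(arm)

    return [wins[a] / pulls[a] for a in range(n_arms)]


def average_estimates(true_means, horizon=50, epsilon=0.1, runs=5000, seed=0):
    """Average each arm's sample mean over many independent bandit runs."""
    rng = random.Random(seed)
    totals = [0.0] * len(true_means)
    for _ in range(runs):
        for arm, estimate in enumerate(run_bandit(true_means, horizon, epsilon, rng)):
            totals[arm] += estimate
    return [total / runs for total in totals]


# Assumed setup: two versions with identical true conversion rates.
TRUE_MEANS = [0.5, 0.5]
estimates = average_estimates(TRUE_MEANS)
for arm, (truth, estimate) in enumerate(zip(TRUE_MEANS, estimates)):
    print(f"arm {arm}: true mean {truth:.3f}, average bandit estimate {estimate:.3f}")
```

Both arms have the same true rate, so any gap between the averaged estimates and 0.5 comes only from how the data was collected. An arm that starts with bad luck gets pulled less often, so its low estimate is rarely corrected, while an arm that starts with good luck keeps getting pulled and drifts back toward its true rate.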